This blog post was written by Galia Novakova Nedeltcheva, Elisabetta Di Nitto, Hamta Sedghani and Danilo Ardagna.

Can PIACERE and AI-SPRINT collaborate and integrate their results? The short answer is yes. This blog post explores the potential of such a collaboration.

The main objective of the ongoing EU project AI-SPRINT[1] (“Artificial intelligence in Secure PRIvacy-preserving computing coNTinuum”) is to implement a design and runtime framework that accelerates the development of AI applications whose components are spread across the edge-cloud computing continuum. AI-SPRINT tools will allow developers to trade off application performance (in terms of end-to-end latency or throughput), energy efficiency, and AI model accuracy while providing security and privacy guarantees.

The overall AI-SPRINT objectives are:

- Develop simplified programming models

- Provide automated deployment and dynamic reconfiguration of AI applications

- Ensure secure execution of AI applications

- Build highly specialized building blocks for privacy preservation, distributed training, and architecture enhancement

- Be open source

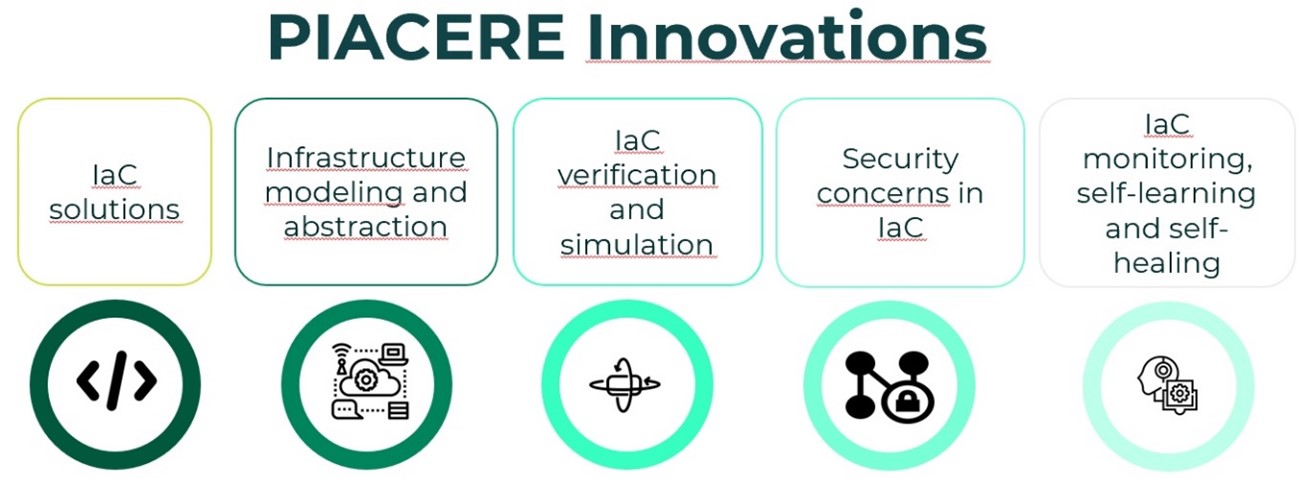

The PIACERE[2] project (“Programming trustworthy Infrastructure As Code in a sEcuRE framework”) focuses not on application development but, rather, on application deployment and operation as part of the DevSecOps lifecycle. PIACERE's main objective is to develop a solution that covers the development, deployment, and operation of Infrastructure as Code (IaC), that is, the code created to deploy applications on the computing continuum. Essentially, the PIACERE framework aims to support the DevSecOps activities concerning IaC and to shorten the learning curve for new DevSecOps teams. Overall, PIACERE's innovations (see Figure 1) bring the following benefits:

- Making the creation of infrastructural code more accessible to the DevSecOps teams

- Increasing the quality, security, trustworthiness and evolvability of infrastructural code

- Ensuring business continuity by providing self-healing mechanisms in anticipation of failures and violations

- Allowing IaC to self-learn from previous conditions that triggered unexpected situations

Figure 1. PIACERE

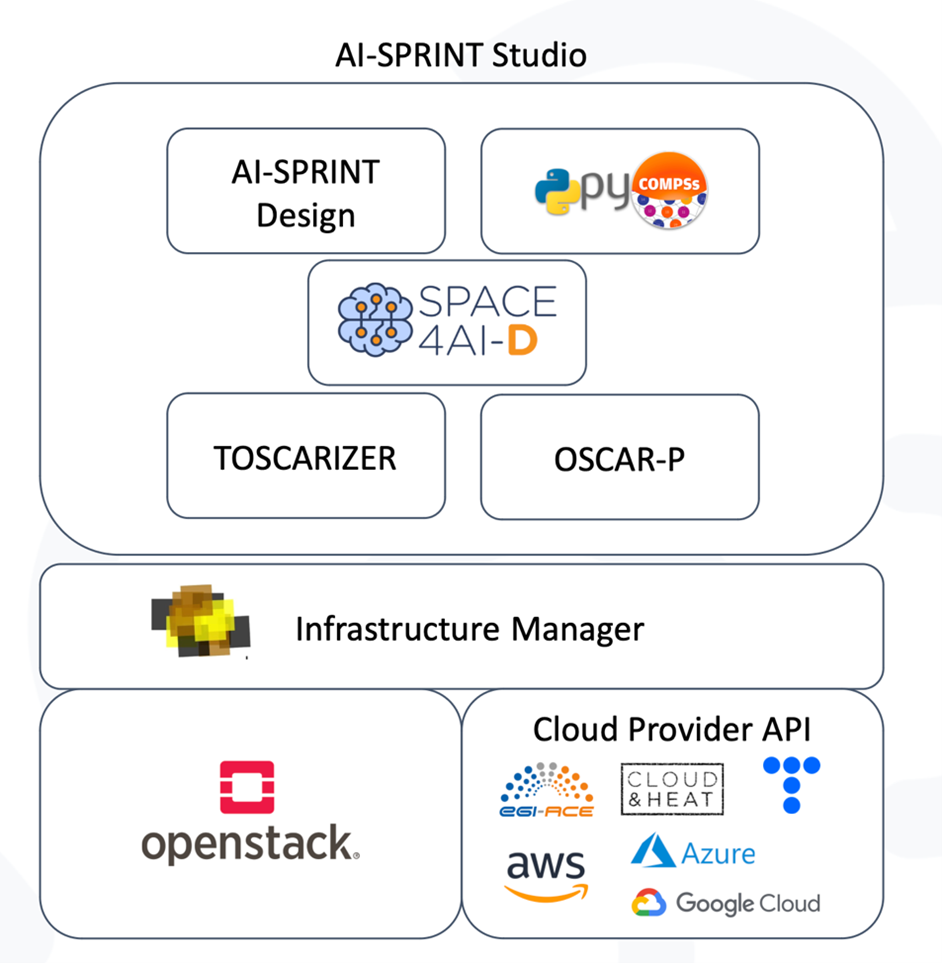

AI-SPRINT and PIACERE, then, focus on different but complementary facets: AI-SPRINT addresses the development and optimal execution of AI applications, while PIACERE proposes a DevSecOps toolset for IaC. AI-SPRINT Studio focuses on application development and, possibly, optimization (see Figure 2). Within AI-SPRINT Studio, AI developers can access a suite of tools supporting the design, deployment, profiling, and optimization of AI applications [1]. AI-SPRINT Design provides high-level abstractions in the form of Python annotations that hide from the developer the complexity related, on the one hand, to AI application design (supporting, e.g., the description of early exits, model drift detection, and Deep Neural Network (DNN) partitioning) and, on the other hand, to the computing continuum (allowing the definition of multiple candidate resources for the deployment, alternative placements of components/partitions, and performance constraints in terms of latency or throughput). PyCOMPSs supports the automatic parallelization of code through annotations that declare whether each parameter of a Python function is read, written, or both. TOSCARIZER creates the Docker images and TOSCA files for the automatic deployment of application components, which is carried out by the Infrastructure Manager (IM); the IM is compliant with the OpenStack API and implements many adapters, enabling transparent deployment on multiple clouds. Once the application is deployed, OSCAR-P supports its automated profiling on multiple hardware/software combinations and the generation of performance models that predict the performance of AI application components on unseen configurations. Finally, SPACE4AI-D identifies the most convenient resource set and placement of components/partitions, maximizing efficiency and minimizing cost, by using a random greedy algorithm combined with several heuristics (e.g., local search, tabu search, simulated annealing, and genetic algorithms).
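To make the SPACE4AI-D step more concrete, here is a minimal Python sketch of a randomized greedy placement followed by local search. It is not SPACE4AI-D's code: the component and resource names, the costs, the response times (standing in for the predictions of OSCAR-P-style performance models), the sequential-pipeline latency model, and the one-resource-per-component cost model are all hypothetical assumptions. Each iteration builds a placement by choosing, at random, among the cheapest resources that can still meet the latency constraint, and then improves it by moving single components to cheaper resources.

```python
import random
from typing import Dict, Optional, Tuple

# All names and numbers below are hypothetical, chosen only for illustration.
COMPONENTS = ["preprocess", "detect", "classify"]  # a sequential pipeline
# Hourly cost of each candidate resource (each component gets its own resource).
COST = {"edge_rpi": 0.05, "edge_jetson": 0.20, "cloud_vm": 0.50, "cloud_gpu": 1.50}
# Predicted response time (s) of each component on each resource, standing in
# for the predictions of profiling-based performance models.
TIME = {
    "preprocess": {"edge_rpi": 0.30, "edge_jetson": 0.10, "cloud_vm": 0.08, "cloud_gpu": 0.07},
    "detect": {"edge_rpi": 2.00, "edge_jetson": 0.40, "cloud_vm": 0.60, "cloud_gpu": 0.15},
    "classify": {"edge_rpi": 0.90, "edge_jetson": 0.20, "cloud_vm": 0.25, "cloud_gpu": 0.05},
}
MAX_LATENCY = 1.0  # end-to-end latency constraint (s)

Placement = Dict[str, str]


def latency(p: Placement) -> float:
    # Components run one after the other, so their times add up.
    return sum(TIME[c][p[c]] for c in COMPONENTS)


def cost(p: Placement) -> float:
    return sum(COST[p[c]] for c in COMPONENTS)


def random_greedy(rng: random.Random, rcl_size: int = 2) -> Optional[Placement]:
    """Visit components in random order; for each, pick at random among the
    `rcl_size` cheapest resources that can still meet MAX_LATENCY."""
    order = COMPONENTS[:]
    rng.shuffle(order)
    placement: Placement = {}
    used = 0.0
    for i, comp in enumerate(order):
        # Optimistic bound: fastest possible time of the components not yet placed.
        rest = sum(min(TIME[c].values()) for c in order[i + 1:])
        feasible = [r for r in COST if used + TIME[comp][r] + rest <= MAX_LATENCY]
        if not feasible:
            return None
        feasible.sort(key=lambda r: COST[r])
        choice = rng.choice(feasible[:rcl_size])
        placement[comp] = choice
        used += TIME[comp][choice]
    return placement


def local_search(p: Placement) -> Placement:
    """Repeatedly move one component to a cheaper resource while the
    latency constraint still holds (first-improvement hill climbing)."""
    p = dict(p)
    improved = True
    while improved:
        improved = False
        for comp in COMPONENTS:
            for r in sorted(COST, key=COST.get):
                cand = {**p, comp: r}
                if cost(cand) < cost(p) and latency(cand) <= MAX_LATENCY:
                    p = cand
                    improved = True
                    break
    return p


def optimize(iterations: int = 100, seed: int = 0) -> Tuple[Placement, float]:
    rng = random.Random(seed)
    best: Optional[Placement] = None
    for _ in range(iterations):
        start = random_greedy(rng)
        if start is None:
            continue
        candidate = local_search(start)
        if best is None or cost(candidate) < cost(best):
            best = candidate
    if best is None:
        raise ValueError("no placement satisfies the latency constraint")
    return best, cost(best)


if __name__ == "__main__":
    best, total = optimize()
    print(best, round(total, 2), round(latency(best), 2))
```

With these made-up numbers the search settles on `preprocess` on `edge_rpi` and both model stages on `edge_jetson`, for a cost of 0.45 per hour and an end-to-end latency of 0.9 s. The restricted candidate list size (`rcl_size`) trades greediness for diversity across iterations.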

Figure 2. AI-SPRINT design time components

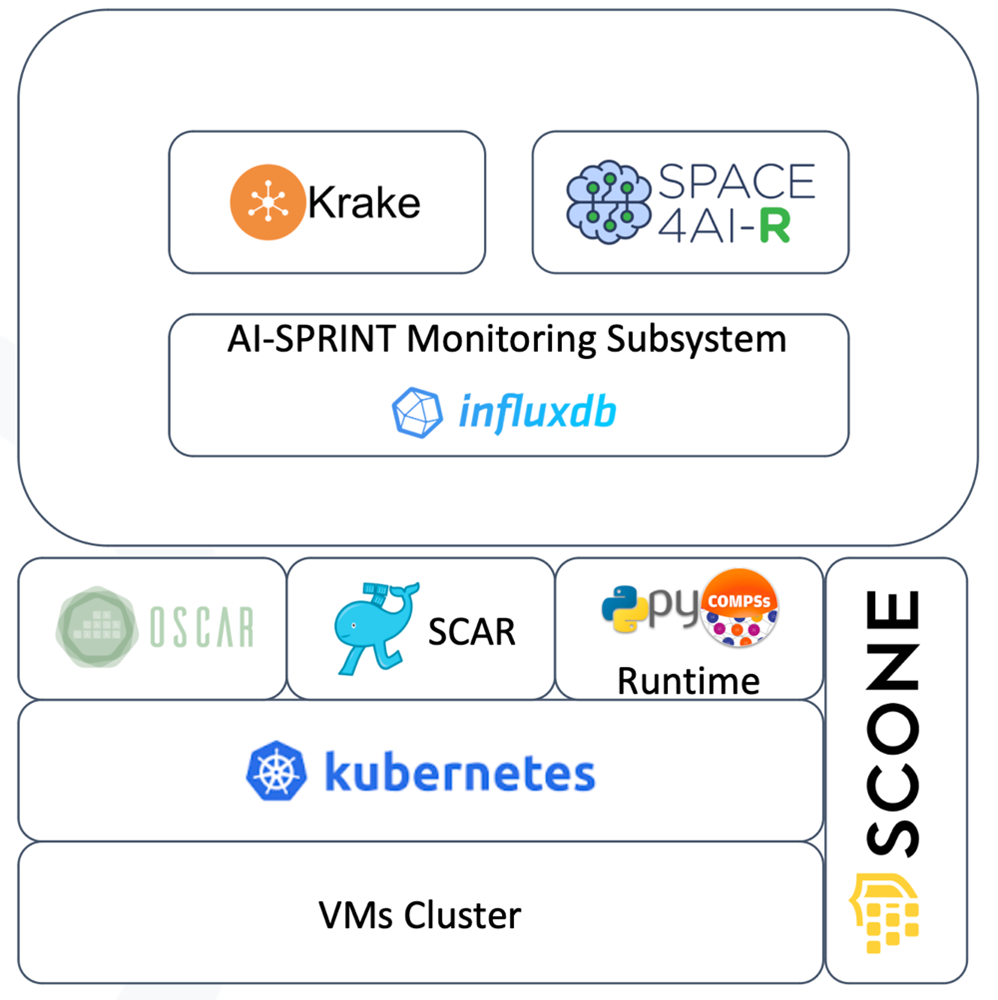

The objective of the AI-SPRINT Runtime (see Figure 3) is to support the monitoring and adaptation of AI applications running across the computing continuum [2]. All AI-SPRINT application components are dockerized, and container execution is orchestrated by Kubernetes. AI application inference and training are supported by different runtime environments. Inference is supported by the OSCAR and SCAR frameworks, which allow the seamless execution of AI applications according to the FaaS (Functions-as-a-Service) paradigm. Load variations, however, may cause resource saturation or underutilization, which can strongly affect component response times and execution costs. For this reason, AI-SPRINT adopts a monitoring system [3] based on InfluxDB that gathers both infrastructure and application metrics. The Runtime environment then adapts component placement to such events by migrating component executions from edge to cloud and vice versa, by scaling the number of cloud resources, and/or by changing the configuration of DNN model partitions. Adaptation relies on SPACE4AI-R (the runtime counterpart of SPACE4AI-D, which implements a fast random tabu search heuristic) when performance metrics such as application latency are concerned, or on Krake when energy metrics are concerned. For example, Krake can migrate AI application components at runtime from one edge data center to another if better energy sources are available there, reducing the application's CO2 emissions. AI application training is based on the PyCOMPSs runtime, which can distribute AI model training and hyperparameter tuning across multiple (possibly GPU-based) nodes.

Finally, SCONE is the AI-SPRINT security solution [4]: it transparently and automatically supports the execution of application components in Trusted Execution Environments, protecting them even in environments where an attacker has root access to the underlying hardware or virtualized resources.
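SPACE4AI-R is described above as a fast random tabu search heuristic. The Python sketch below shows the general shape of a tabu-search re-placement triggered by a load change; it is a generic, deterministic tabu search written for illustration (the randomization is omitted), not SPACE4AI-R's implementation. The resources, costs, response times, the assumption that only edge response times grow with load (cloud resources being scaled out), the penalty weight, and the tabu tenure are all hypothetical. Starting from the current placement, each step moves one component to the best-scoring resource that is not tabu, then forbids moving it straight back for a few steps so that the search can leave local optima.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

# Hypothetical hourly costs of the candidate resources.
COST = {"edge": 0.10, "cloud_small": 0.40, "cloud_large": 1.00}
# Hypothetical response times (s) of each component at nominal load.
BASE_TIME = {
    "detect": {"edge": 0.50, "cloud_small": 0.30, "cloud_large": 0.10},
    "classify": {"edge": 0.30, "cloud_small": 0.20, "cloud_large": 0.05},
}
MAX_LATENCY = 1.0  # end-to-end latency constraint (s)
PENALTY = 10.0     # weight of a latency violation in the search score

Placement = Dict[str, str]


def response_time(component: str, resource: str, load: float) -> float:
    # Assumption: edge devices saturate, so their times scale with the load
    # factor, while cloud resources are scaled out and keep nominal times.
    t = BASE_TIME[component][resource]
    return t * load if resource == "edge" else t


def latency(p: Placement, load: float) -> float:
    # Components form a chain, so end-to-end latency is the sum of their times.
    return sum(response_time(c, r, load) for c, r in p.items())


def cost(p: Placement) -> float:
    return sum(COST[r] for r in p.values())


def score(p: Placement, load: float) -> float:
    # Infeasible placements may be visited during the search but are penalized.
    return cost(p) + PENALTY * max(0.0, latency(p, load) - MAX_LATENCY)


def tabu_search(start: Placement, load: float,
                iterations: int = 20, tenure: int = 2) -> Optional[Placement]:
    current = dict(start)
    best = dict(start) if latency(start, load) <= MAX_LATENCY else None
    # Each entry (component, resource) forbids moving that component back there.
    tabu: Deque[Tuple[str, str]] = deque(maxlen=tenure)
    for _ in range(iterations):
        candidates: List[Tuple[float, str, str, Placement]] = []
        for comp, old in current.items():
            for r in COST:
                if r == old:
                    continue
                neighbor = {**current, comp: r}
                new_best = latency(neighbor, load) <= MAX_LATENCY and (
                    best is None or cost(neighbor) < cost(best))
                # Aspiration criterion: a tabu move is allowed only if it yields a new best.
                if (comp, r) in tabu and not new_best:
                    continue
                candidates.append((score(neighbor, load), comp, old, neighbor))
        if not candidates:
            break
        _, comp, old, current = min(candidates, key=lambda c: c[0])
        tabu.append((comp, old))
        if latency(current, load) <= MAX_LATENCY and (best is None or cost(current) < cost(best)):
            best = dict(current)
    return best


if __name__ == "__main__":
    current = {"detect": "edge", "classify": "edge"}
    print(tabu_search(current, load=1.0))
    print(tabu_search(current, load=2.0))
```

At nominal load the all-edge placement already meets the 1 s constraint and is kept; when the (hypothetical) load doubles, the search moves `detect` to `cloud_small` while `classify` stays on the edge.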

Figure 3. AI-SPRINT runtime environment

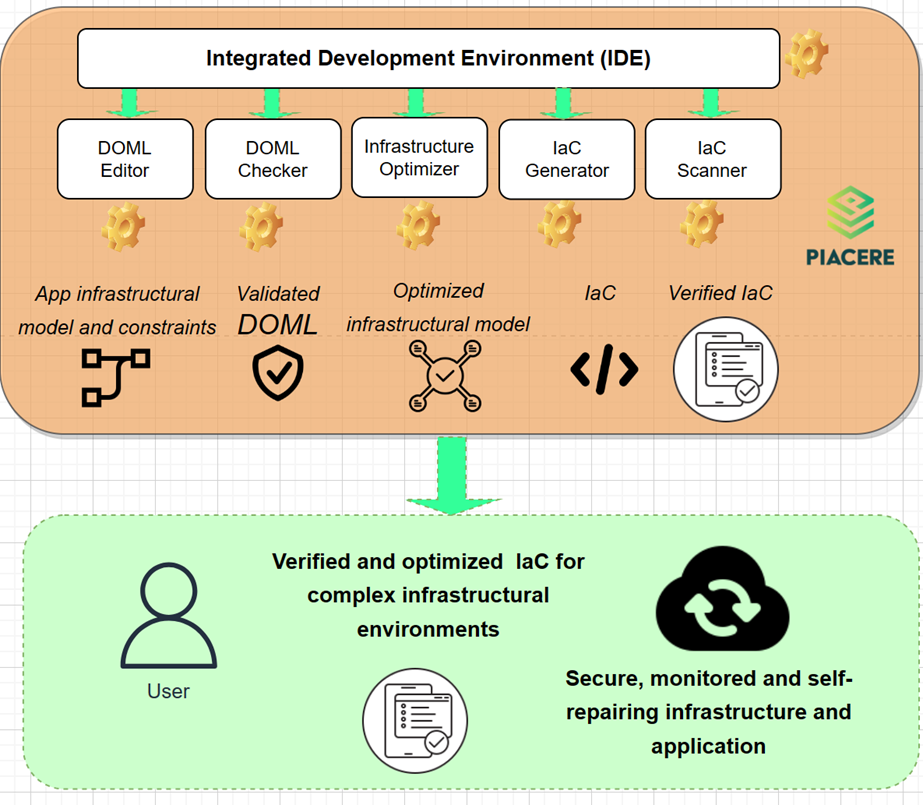

Figure 4. PIACERE design time components

The PIACERE design-time environment features the DevSecOps Modelling Language (DOML) and tools for automated deployment (see Figure 4). DOML [5] [6] [7] [8] is a domain-specific language designed for modelling cloud applications and infrastructural resources; it hides the specificities and technicalities of current IaC solutions and thereby increases the productivity of DevSecOps teams. DOML improves the ability of both expert and non-expert DevSecOps teams to model provisioning, deployment, and configuration by abstracting execution environments and composing these abstractions into machine-readable representations. Teams can select and combine the abstractions to create a correct model for infrastructure provisioning, configuration management, deployment, and self-healing. The main objective of DOML has been to reduce the effort needed to automate the deployment and operation of an application together with its underlying infrastructure. The result is a high-level modelling language whose models are translated into de facto standard IaC languages supporting the Ops phases of the software lifecycle.

DOML models are created using the PIACERE IDE [9], which guides users and also integrates all the other PIACERE design-time tools. The DOML models are then translated by the Infrastructural Code Generator (ICG) [10], [11] into the target IaC languages for complex applications. When a DOML model describes the desired infrastructure and its characteristics, the ICG generates IaC code that realizes both the provisioning and the configuration of the target infrastructure. Along with this code, the ICG also generates IaC files that install the PIACERE security and monitoring agents on every virtual machine defined in the target infrastructure, making it observable and more secure.
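The idea of generating both provisioning and configuration code from a single model can be illustrated with a toy Python generator. It is a hypothetical sketch, not the ICG: the `VirtualMachine` and `InfrastructureModel` classes are a drastically simplified stand-in for a DOML model, the targets are assumed to be Terraform (using the OpenStack provider's `openstack_compute_instance_v2` resource) for provisioning and an Ansible-style playbook for configuration, and the `monitoring_agent` and `security_agent` role names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class VirtualMachine:
    """Hypothetical, heavily simplified stand-in for a DOML virtual machine."""
    name: str
    image: str
    flavor: str


@dataclass
class InfrastructureModel:
    """Hypothetical container for the modelled infrastructure elements."""
    vms: List[VirtualMachine] = field(default_factory=list)


def to_terraform(model: InfrastructureModel) -> str:
    """Emit one Terraform resource block per modelled VM (provisioning)."""
    blocks = []
    for vm in model.vms:
        blocks.append(
            f'resource "openstack_compute_instance_v2" "{vm.name}" {{\n'
            f'  name        = "{vm.name}"\n'
            f'  image_name  = "{vm.image}"\n'
            f'  flavor_name = "{vm.flavor}"\n'
            "}\n"
        )
    return "\n".join(blocks)


def agent_playbook(model: InfrastructureModel,
                   agents: Sequence[str] = ("monitoring_agent", "security_agent")) -> str:
    """Emit one Ansible-style play per VM applying the (hypothetical) agent roles."""
    lines: List[str] = []
    for vm in model.vms:
        lines.append(f"- hosts: {vm.name}")
        lines.append("  roles:")
        lines.extend(f"    - {agent}" for agent in agents)
    return "\n".join(lines) + "\n"


def generate(model: InfrastructureModel) -> Dict[str, str]:
    """Map output file names to the generated IaC text."""
    return {"main.tf": to_terraform(model), "agents.yml": agent_playbook(model)}


if __name__ == "__main__":
    model = InfrastructureModel([
        VirtualMachine("frontend", "ubuntu-22.04", "m1.small"),
        VirtualMachine("backend", "ubuntu-22.04", "m1.medium"),
    ])
    for filename, content in generate(model).items():
        print(f"# {filename}\n{content}")
```

Keeping the model separate from the emitters means that another target language could be supported by adding one more emitter function, without changing the model itself.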

A crucial aspect of developing IaC scripts is ensuring their internal coherence and the absence of potential vulnerabilities. To this end, PIACERE features a DOML verification component as well as an IaC Security Scan Runner [11], [12]. The PIACERE IaC Optimized Platform (IOP) [13] lets users obtain deployments optimized for their needs. The IOP is flexible enough to let users define which objectives should be optimized (such as cost and performance) and which main requirements must be met (such as a minimum availability or the use of a certain provider's resources). The IOP can model and solve single-objective, multi-objective, and many-objective optimization problems, depending on the user's needs. Users can also specify their non-functional requirements, and the algorithm builds the optimization search space accordingly.

From this overview of their respective components, it should be clear that AI-SPRINT and PIACERE include some complementary features that could be integrated, as well as some similar ones that could be compared to assess their effectiveness and flexibility. Possible actions for cooperation are therefore the following:

- AI-SPRINT could detect potential security vulnerabilities in its optimal deployments by analysing the Infrastructure Manager TOSCA recipes with the PIACERE IaC Security Scan Runner.

- PIACERE could exploit OSCAR-P to profile applications and optimize their resource usage, thus making it possible to deploy them not only in the cloud but also at the edge.

- PIACERE and AI-SPRINT together could experiment with using the PIACERE DOML to model application deployments and with translating DOML models into TOSCA, so as to take advantage of the AI-SPRINT runtime tools.
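As a rough illustration of the first cooperation action, the toy Python scanner below flags risky patterns in an IaC recipe line by line. It is not the PIACERE IaC Security Scan Runner: the three regular-expression rules and the recipe fragment (loosely styled on TOSCA YAML, and not a valid Infrastructure Manager recipe) are hypothetical.

```python
import re
from typing import List, NamedTuple


class Finding(NamedTuple):
    line: int
    rule: str
    text: str


# Hypothetical rules, loosely in the spirit of common IaC linters.
RULES = {
    # A literal value assigned to a password/secret/token key; values starting
    # with "{" (e.g. an input reference) are not flagged.
    "hard-coded secret": re.compile(r"(password|secret|token)\s*:\s*['\"]?[^'\"\s{]+", re.IGNORECASE),
    "open to the internet": re.compile(r"0\.0\.0\.0/0"),
    "plain-text protocol": re.compile(r"\bhttp://"),
}


def scan(recipe: str) -> List[Finding]:
    """Return one finding per (line, rule) match, in line order."""
    findings: List[Finding] = []
    for lineno, line in enumerate(recipe.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(lineno, rule, line.strip()))
    return findings


# Made-up recipe fragment used only to exercise the rules.
RECIPE = """\
topology_template:
  node_templates:
    web_server:
      properties:
        admin_password: "changeme"
    web_firewall:
      properties:
        remote_cidr: 0.0.0.0/0
        port: 22
    app:
      properties:
        model_url: http://models.example.org/detector.onnx
"""

if __name__ == "__main__":
    for finding in scan(RECIPE):
        print(f"line {finding.line}: {finding.rule}: {finding.text}")
```

Line-based pattern matching is deliberately naive: it only shows where such a check fits in the pipeline, whereas dedicated IaC scanners typically parse the recipe and apply much richer rule sets.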

Finally, collaboration between the two projects could increase awareness of the capabilities of the frameworks they have developed and could lead to new ideas for improvement and impact creation.

[1] https://ai-sprint-project.eu/components

[2] https://piacere-project.eu/innovation-assets/

References

[1] D. Lezzi et al., “Deliverable D2.1 First release and evaluation of the AI-SPRINT design tools,” Dec. 2021, AI-SPRINT Consortium.

[2] G. Moltò et al., “Deliverable D3.1 First release and evaluation of the runtime environment,” Dec. 2021, AI-SPRINT Consortium.

[3] R. Ostrzycki et al., “Deliverable D3.2 First release and evaluation of the monitoring system,” Dec. 2021, AI-SPRINT Consortium.

[4] A. Martin and G. Verticale, “Deliverable D4.1 Initial Release and Evaluation of the Security Tools,” Dec. 2021, AI-SPRINT Consortium.

[5] S. Canzoneri and E. Di Nitto, “Deliverable D3.3 PIACERE Abstractions, DOML and DOML-E – v3,” May 2023, PIACERE Consortium.

[6] PIACERE Consortium, “PIACERE DOML Specification v3.0,” Oct. 2022.

[7] S. Canzoneri, “DOML v3.0 tutorial,” Jun. 2023, PIACERE Consortium.

[8] E. Morganti, “Deliverable D2.2 PIACERE DevSecOps Framework Requirements specification, architecture and integration strategy – v2,” Dec. 2022, PIACERE Consortium.

[9] E. Villanueva, “Deliverable D3.9 PIACERE IDE – v3,” Jun. 2023, PIACERE Consortium.

[10] J. Díaz de Arcaya, “Deliverable D5.3 IaC execution platform prototype – v3,” May 2023, PIACERE Consortium.

[11] D. Benedetto, L. Blasi, and L. Niculut, “Deliverable D3.6 Infrastructural code generation – v3,” May 2023, PIACERE Consortium.

[12] M. Cancar, “Deliverable D4.6 IaC Code Security and components security Inspection – v3,” May 2023, PIACERE Consortium.

[13] E. Osaba, I. Etxaniz, and G. Benguria, “Deliverable D5.9 IOP Prototype – v3,” May 2023, PIACERE Consortium.
